Project page for:

- Santiago Cortes, Yuxin Hou, Juho Kannala, and Arno Solin (2019). Iterative path reconstruction for large-scale inertial navigation on smartphones. Accepted for publication in Proceedings of the International Conference on Information Fusion (FUSION). Ottawa, Canada. [arXiv preprint]

Abstract

Modern smartphones have all the sensing capabilities required for accurate and robust navigation and tracking. In specific environments some data streams may be absent, less reliable, or flat out wrong. In particular, the GNSS signal can become flawed or silent inside buildings or in streets with tall buildings. In this application paper, we aim to advance the current state-of-the-art in motion estimation using inertial measurements in combination with partial GNSS data on standard smartphones. We show how iterative estimation methods help refine the positioning path estimates in retrospective use cases that can cover both fixed-interval and fixed-lag scenarios. We compare estimation results provided by global iterated Kalman filtering methods to those of a visual-inertial tracking scheme (Apple ARKit). The practical applicability is demonstrated on real-world use cases on empirical data acquired from both smartphones and tablet devices.

Supplemental material for experiments

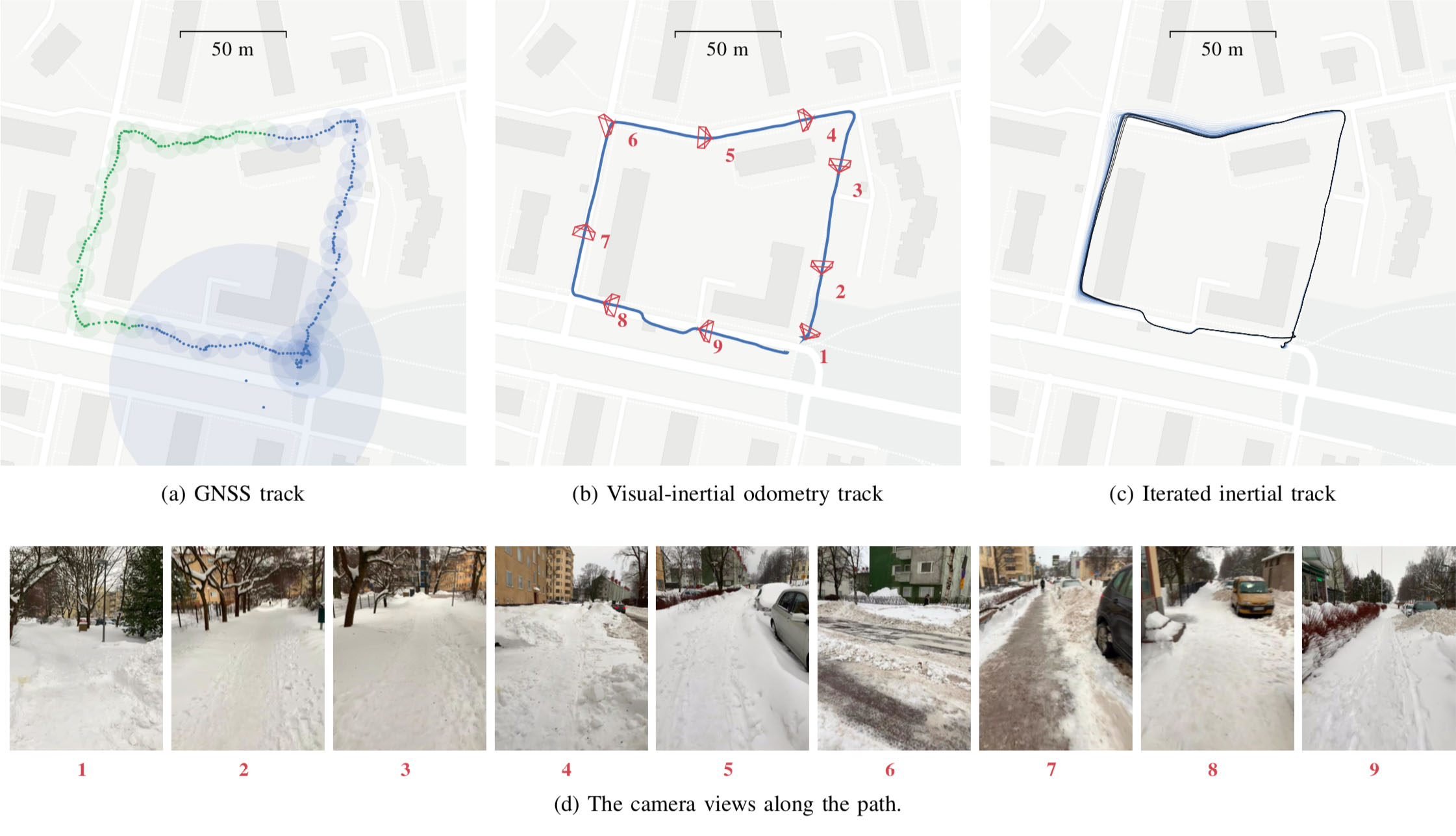

One example path captured by an Apple iPad. (a) GNSS/platform location fixes with their uncertainty radii. The samples in green were removed for the gap experiment. (b) The visual-inertial odometry (Apple ARKit) track captured for reference and validation. ARKit fuses information from the IMU and the device camera. The path has been manually aligned to the starting point and orientation. (c) Our iterative solution to the gap experiment, where we fuse the iPad IMU readings with the blue GNSS locations in (a). Note that the path straightens along the roads and the corners become square. (d) Example frames along the path showing the test environment; the associated camera poses are shown in (b).
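The gap experiment in (c) amounts to smoothing over a sequence in which some GNSS fixes are absent. Below is a minimal sketch of that idea. It is not the paper's model: it assumes a one-dimensional position, IMU readings already reduced to a per-step displacement `u`, and known noise variances `q` and `r`. Missing fixes are passed as `None` and skip the Kalman update, and a Rauch-Tung-Striebel (RTS) backward pass then lets later fixes correct the path inside the gap. The function `kalman_rts_1d` and all of its parameters are hypothetical.

```python
from typing import List, Optional, Tuple


def kalman_rts_1d(
    u: List[float],            # per-step displacement from IMU dead reckoning (assumed input)
    y: List[Optional[float]],  # GNSS position per step; None where the fix is missing
    q: float,                  # process-noise variance added per step (assumed)
    r: float,                  # GNSS measurement-noise variance (assumed)
    m0: float = 0.0,           # prior mean of the initial position
    p0: float = 1.0,           # prior variance of the initial position
) -> Tuple[List[float], List[float]]:
    """Hypothetical 1D Kalman filter + RTS smoother that tolerates GNSS gaps."""
    mf, pf = [], []  # filtered means and variances
    mp, pp = [], []  # one-step predicted means and variances
    m, p = m0, p0
    for uk, yk in zip(u, y):
        # Predict with the random-walk model x_k = x_{k-1} + u_k + w_k.
        m_pred, p_pred = m + uk, p + q
        mp.append(m_pred)
        pp.append(p_pred)
        if yk is None:
            # Missing GNSS fix: keep the prediction; the variance keeps growing.
            m, p = m_pred, p_pred
        else:
            gain = p_pred / (p_pred + r)
            m = m_pred + gain * (yk - m_pred)
            p = (1.0 - gain) * p_pred
        mf.append(m)
        pf.append(p)
    # Rauch-Tung-Striebel backward pass (the transition coefficient is 1).
    ms, ps = mf[:], pf[:]
    for k in range(len(mf) - 2, -1, -1):
        g = pf[k] / pp[k + 1]
        ms[k] = mf[k] + g * (ms[k + 1] - mp[k + 1])
        ps[k] = pf[k] + g * g * (ps[k + 1] - pp[k + 1])
    return ms, ps


# Ten 1 m steps; the fixes at 4, 5 and 6 m are dropped, mimicking the gap experiment.
means, variances = kalman_rts_1d(
    u=[1.0] * 10,
    y=[1.0, 2.0, 3.0, None, None, None, 7.0, 8.0, 9.0, 10.0],
    q=0.1,
    r=1.0,
)
```

With these noise-free toy inputs, the smoothed means follow the true positions through the gap. The smoothed variance in the middle of the gap is larger than at the measured samples on either side, because there the estimate rests on IMU increments alone.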

The video shows the visual-inertial odometry (Apple ARKit) track captured for reference and validation, sped up by a factor of 10. The local shape is very accurate, but some long-term drift is visible at the end of the track. The ARKit track has been placed on the map manually, because ARKit does not provide global (geographic) coordinates.
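Because the ARKit track lives in its own local frame, placing it on the map means choosing a translation and a rotation. Below is a minimal sketch of that placement. It assumes a 2D rigid transform (no scaling) with a user-chosen heading angle `theta`, and map coordinates given in local metres. `align_track` is a hypothetical helper, not part of ARKit. It pins the first sample to a chosen start point and rotates the rest of the track about it.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres


def align_track(track: List[Point], start: Point, theta: float) -> List[Point]:
    """Hypothetical helper: rigidly place a local 2D track in a map frame.

    Translates the track so its first sample is at the origin, rotates it
    counter-clockwise by `theta` radians, then shifts it to `start`.
    """
    x0, y0 = track[0]
    c, s = math.cos(theta), math.sin(theta)
    placed = []
    for x, y in track:
        dx, dy = x - x0, y - y0
        placed.append((start[0] + c * dx - s * dy, start[1] + s * dx + c * dy))
    return placed
```

Since the transform is rigid, it preserves the local shape that makes the ARKit track useful as a reference. It cannot remove long-term drift, so the end of the track can still diverge from the map.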

The GNSS/GPS data provided by the iPad. The accuracy is only good to within a few meters. Although the small-scale (local) shape is poor, there is no large-scale drift. The GNSS/GPS locations left out in our experiment are shown in green as open circles.
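Before fusion, each fix and its uncertainty radius must be expressed in a local metric frame with a measurement variance. Below is a minimal sketch of that step. It rests on three assumptions that this page does not state. First, it uses an equirectangular (locally flat-Earth) projection around a chosen origin, which is adequate at city scale. Second, it treats the radius as a one-sigma bound on each horizontal axis. Third, it assumes the fixes come from Core Location, whose `horizontalAccuracy` is documented as negative for invalid fixes. Such fixes are mapped to `None`, so they behave like the removed samples. `gnss_to_local` is a hypothetical name.

```python
import math
from typing import List, Optional, Tuple

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def gnss_to_local(
    samples: List[Tuple[float, float, float]],  # (lat_deg, lon_deg, radius_m)
    origin: Tuple[float, float],                # (lat_deg, lon_deg) of the local origin
) -> List[Optional[Tuple[float, float, float]]]:
    """Hypothetical conversion to (east_m, north_m, variance_m2) per fix."""
    lat0, lon0 = math.radians(origin[0]), math.radians(origin[1])
    out: List[Optional[Tuple[float, float, float]]] = []
    for lat, lon, radius in samples:
        if radius < 0:
            # Negative accuracy radius: treat the fix as invalid (missing).
            out.append(None)
            continue
        # Equirectangular approximation around the origin.
        east = EARTH_RADIUS_M * (math.radians(lon) - lon0) * math.cos(lat0)
        north = EARTH_RADIUS_M * (math.radians(lat) - lat0)
        # Assumption: radius ~ one standard deviation per horizontal axis.
        out.append((east, north, radius ** 2))
    return out
```

Reading the radius as one sigma is a modelling choice. If it is instead a higher-confidence bound, the variance should be scaled down accordingly.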

Progression of our global iteration over 20 rounds of forward-backward sweeps.

Progression of the smoother results over the iterations, filling in the missing GNSS/GPS data and correcting the small-scale shape.
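Repeated forward-backward sweeps of this kind can be illustrated with an iterated extended Kalman smoother (IEKS). This is a Gauss-Newton scheme that re-linearizes the measurement model around the previous round's smoothed path and runs the filter and smoother again. The sketch below stands in under stated assumptions and is not the paper's model. It uses a 1D position with IMU displacements `u` and linear dynamics, plus a hypothetical nonlinear range measurement to a beacon at lateral offset `d`, chosen only so that re-linearization has an effect. `ieks_range_1d` and all of its parameters are hypothetical.

```python
import math
from typing import List, Tuple


def ieks_range_1d(
    u: List[float], y: List[float], d: float, q: float, r: float,
    m0: float, p0: float, iterations: int = 20,
) -> Tuple[List[float], List[float]]:
    """Hypothetical IEKS for x_k = x_{k-1} + u_k + w_k, y_k = hypot(x_k, d) + v_k."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    # Initial linearization path: dead reckoning from the prior mean.
    xbar, x = [], m0
    for uk in u:
        x += uk
        xbar.append(x)
    for _ in range(iterations):
        mf, pf, mp, pp = [], [], [], []
        m, p = m0, p0
        for k, (uk, yk) in enumerate(zip(u, y)):
            m_pred, p_pred = m + uk, p + q
            h = math.hypot(xbar[k], d)  # predicted range at the linearization point
            H = xbar[k] / h             # derivative dh/dx at xbar[k]
            s = H * p_pred * H + r
            gain = p_pred * H / s
            # Gauss-Newton innovation: linearize about xbar[k], not m_pred.
            m = m_pred + gain * (yk - h - H * (m_pred - xbar[k]))
            p = p_pred - gain * s * gain
            mf.append(m); pf.append(p); mp.append(m_pred); pp.append(p_pred)
        # RTS backward pass; the dynamics are linear, so no re-linearization here.
        ms, ps = mf[:], pf[:]
        for k in range(len(mf) - 2, -1, -1):
            g = pf[k] / pp[k + 1]
            ms[k] = mf[k] + g * (ms[k + 1] - mp[k + 1])
            ps[k] = pf[k] + g * g * (ps[k + 1] - pp[k + 1])
        xbar = ms  # the next sweep linearizes around this smoothed path
    return ms, ps
```

To check convergence, compare the outputs of successive rounds (for example, `iterations=19` against `iterations=20`). On well-behaved problems, the change between rounds should shrink quickly.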