Gaussian Process Priors for View-Aware Inference

Project page for:

- Yuxin Hou, Ari Heljakka, and Arno Solin (2021). Gaussian Process Priors for View-Aware Inference. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21), to appear. [arXiv preprint]

Abstract

While frame-independent predictions with deep neural networks have become the prominent solutions to many computer vision tasks, the potential benefits of utilizing correlations between frames have received less attention. Even though probabilistic machine learning provides the ability to encode correlation as prior knowledge for inference, there is a tangible gap between the theory and practice of applying probabilistic methods to modern vision problems. For this, we derive a principled framework to combine information coupling between camera poses (translation and orientation) with deep models. We proposed a novel view kernel that generalizes the standard periodic kernel in SO(3). We show how this soft-prior knowledge can aid several pose-related vision tasks like novel view synthesis and predict arbitrary points in the latent space of generative models, pointing towards a range of new applications for inter-frame reasoning.

Illustrative sketch of the logic of the proposed method: We propose a Gaussian process prior for encoding known six degrees-of-freedom camera movement (relative pose information) into probabilistic models. In this example, built-in visual- inertial tracking of the iPhone movement is used for pose estimation. The phone starts from standstill at the left and moves to the right (translation can be seen in the covariance in (a)). The phone then rotates from portrait to landscape which can be read from the orientation (view) covariance in (b).

Example: View synthesis with the view-aware GP prior

The paper features several examples of the applicability of the proposed model. The last example, however, is best demonstrated with videos. Thus we have included a set of videos below for complementing the result figures in the paper.

We consider the task of face reconstruction using a GAN model. As input we use a video captured with an Apple iPhone (where we also capture the phone pose trajectory using ARKit). After face-alignment, the reconstructed face images using StyleGAN are as follows.

The reconstructions are of varying quality, the identity seems to vary a bit, and the freeze frames are due to failed reconstructions for some of the frames (nearest neighbour shown in that case; failures mostly due to failing face alignment for tilted faces).

The next video shows the GP interpolation result, where we only use the first and last frames in the video and synthesise the rest using a view-aware GP prior in the latent space (using the pose trajectory from ARKit).

From the GP model we can also directly get the marginal variance of the latent space predictions. Using sampling, we project that uncertainty to the image space, which is visualized in the video below. The uncertainty is low for the first and last frame (those are the observations!), but higher far from the known inputs.

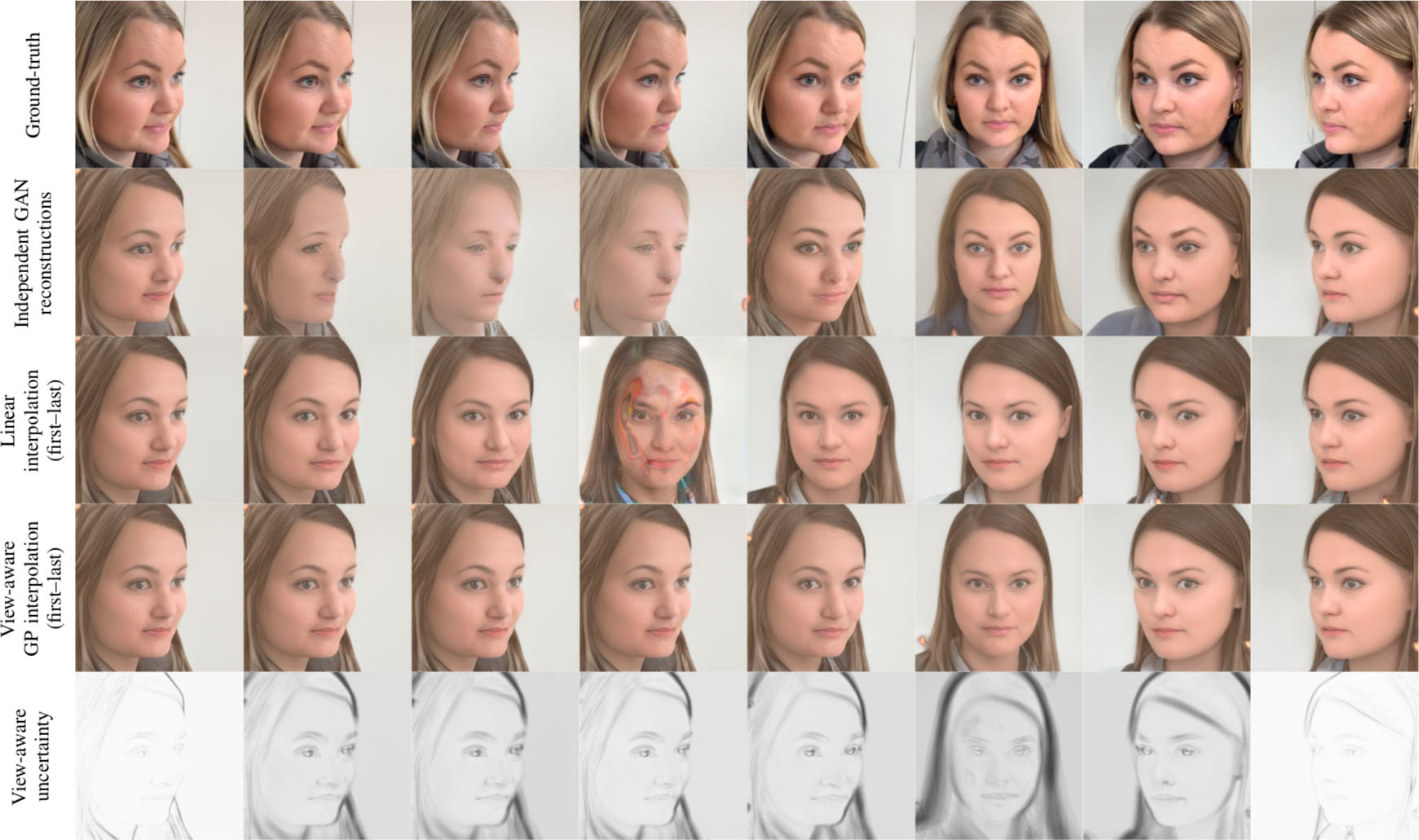

Finally, below are a set of frames summarising the differences between the independent, a naive linear, and the GP interpolated views.

Row #1: Frames separated by equal time intervals from a camera run, aligned on the face. Row #2: Each frame independently projected to GAN latent space and reconstructed. Row #3: Frames produced by reconstructing the first and the last frame and linearly interpolating the intermediate frames in GAN latent space. Row #4: Frames produced by reconstructing the first and the last frame, but interpolating the intermediate frames in GAN latent space by our view-aware GP prior. It can be seen that although linear interpolation achieves good quality, the azimuth rotation angle of the face is lost, as expected. With the view-aware prior, the rotation angle is better preserved. Row #5: The per-pixel uncertainty visualized in the form of standard deviation of the prediction at the corresponding time step. Heavier shading indicates higher uncertainty around the mean trajectory.

Code

The current implementation is available in GitHub.